Any developer, junior or senior, should know and understand the basic rules of database normalization since they are the foundation of relational DBMS. Edgar Codd introduced the concept in 1970 and since then, most of the architectures have been following his rules. However, for high-traffic websites, sometime de-normalized is better than normalized, resulting in faster queries, less swap space and less storage space.

Although this post is not exactly about normalization, another common recommendation is to use a join rather than multiple single queries. In other words, rather than doing “select * from a where condition1” then “select * from b where condition2”, if a and b are in a relationship, it’s better to usually run a query like “select * from a inner join b on a.id = b.aid where conditions”. A classic example is when using categories for a certain object, say movies. Let’s say each movie can have a category and you want to display a list of all movies and the category that movie is in. Some developers might write the following php code:

connect_to_database();

$rs = mysql_query('select * from movies');

while( $row = mysql_fetch_array($rs) ) {

$rs2 = mysql_query('select * from categories where category_id = ' . $row['category_id'];

$row2 = mysql_fetch_array($rs2);

$category = $row2['category_name'];

echo $row['name'] . "<br />Category: " . $category . "<br /><br />";

}

but there’s a simpler and (most of the time) better approach:

connect_to_database();

$rs = mysql_query('select * from movies m inner join categories c on c.category_id = m.category_id');

while( $row = mysql_fetch_array($rs) ) {

echo $row['name'] . "<br />Category: " . $row['category_name'] . "<br /><br />";

}

The first approach generates 1 + N queries, where N is the number of movies. If N is a big number, things might take a while… The second approach only takes 1 query, leaving the hard part to the internal RDBMS engine. Well, sometimes, in certain scenarios, the first version is the right choice. It’s THE solution.

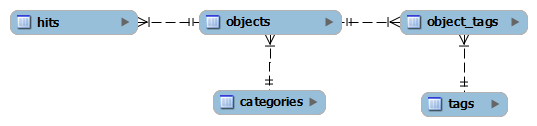

Let’s assume there are 4+1 tables – the main one, call it objects, one for tags, one for categories, another one called hits which will track the number of times a certain object has been seen by a user, and the last one for storing the n-to-n relationship between tags and objects, call it >code>object_tags. The ERD is rather simple:

Also, let’s assume we want to show a list of objects, with all their tags, their current category and the number of hits it has. The results will be paginated, only showing 20 items per page. Simple, right? Anyone would tend to write something like this for the first page:

SELECT * FROM objects o

INNER JOIN categories c ON c.category_id = o.category_id

INNER JOIN object_tags ot ON ot.object_id = o.object_id

INNER JOIN tags t ON t.tag_id = ot.tag_id

INNER JOIN hits h ON h.object_id = o.object_id

LIMIT 20

This was what I wrote too, more or less. However, after spending many hours trying to figure out why that page was loading in more than 100 seconds, whenever it was loading at all, I’ve learned that sometimes it’s better to break the rules and not follow any recommendations. So I tried the following code, and the results were staggering – less than 1s loading time:

$rs = mysql_query('select * from objects limit 20');

$categories = $tags = array(); //used for locally caching categories and tags

while( $row = mysql_fetch_array($rs) ) {

if( !isset($categories[$row['category_id']]) {

$rsCat = mysql_query('SELECT category_name FROM categories WHERE category_id = ' . $row['category_id'] . ' LIMIT 1';

$rowCat = mysql_fetch_array($rsCat);

$categories[$row['category_id']] = $rowCat['category_name'];

unset($rowCat, $rsCat);

}

$objectTags = $toLoad = array();

$rsObjTags = mysql_query('SELECT tag_id FROM object_tags WHERE object_id = ' . $row['object_id']);

while( $rowObjTags = mysql_fetch_array($rsObjTags) ) {

if( isset($tags[$rowObjTags['tag_id']]) ) {

$objectTags[$rowObjTags['tag_id']][] = $tags[$rowObjTags['tag_id']];

}

else {

$toLoad[] = $rowObjTags['tag_id'];

}

}

unset($rsObjTags , $rowObjTags);

if( count($toLoad) ) {

$rsTags = 'SELECT tag_id, tag FROM tags WHERE tag_id IN (' . implode(',', $toLoad) . ') LIMIT ' . count($toLoad);

while( $rowTags = mysql_fetch_array($rsTags) ) {

$tags[$rowTags['tag_id']] = $rowTags['tag'];

$objectTags[$rowTags['tag_id']][] = $rowTags['tag'];

}

}

unset($toLoad, $rsTags, $rowTags);

$rsHits = 'SELECT today_hits FROM hits WHERE object_id = ' . $row['object_id'] . ' LIMIT 1';

$hits = mysql_fetch_array($rsHits);

//ready to display the data

echo $row['object_name'] . ' is in category ' . $categories[$row['category_id']] . '<br />';

echo 'Tags: ' . implode(', ', $objectTags) . '<br/>';

echo 'Hits today: ' . $hits['today_hits'] . '<hr />';

}

I hope there aren’t too many mistakes in the code, I wrote everything in here without actually testing it.

Seems like a lot of work and totally not optimized for something quite straight-forward. However, let’s assume once more that the tables above have the following number of records:

objects – 50,000

categories – 500

tags – 100,000

objects_tags – 250,000

hits – 20,000,000

In this case, 4 joins would result in a huge dataset, requiring a lot of memory, and eventually ending up on the disk. Also, the LIMIT doesn’t help at all, since the dataset is truncated at the end, after scanning all the necessary rows. However, in the unoptimized version we do benefit from the LIMIT, by only getting a fixed set (20 rows) from 50k rows. The rest of the queries are acceptable because they are all primary key based, they are using “LIMIT” as well (3 out of 4), and because their number is rather small – somewhere between 45 and 80 queries.

Hope this helps someone some day. I’d like to know your thoughts, what would have you done? Any other quick fixes for this problem?

Cristi MySQL mysql, performance